A one-way ANOVA is a statistical test used to determine whether or not there is a significant difference between the means of three or more independent groups.

Here’s an example of when we might use a one-way ANOVA:

You randomly split up a class of 90 students into three groups of 30. Each group uses a different studying technique for one month to prepare for an exam. At the end of the month, all of the students take the same exam.

You want to know whether or not the studying technique has an impact on exam scores, so you conduct a one-way ANOVA to determine if there is a statistically significant difference between the mean scores of the three groups.

Before we can conduct a one-way ANOVA, we must first check to make sure that three assumptions are met.

1. Normality – Each sample was drawn from a normally distributed population.

2. Equal Variances – The variances of the populations that the samples come from are equal.

3. Independence – The observations in each group are independent of each other and the observations within groups were obtained by a random sample.

If these assumptions aren’t met, then the results of our one-way ANOVA could be unreliable.

In this post, we explain how to check these assumptions along with what to do if any of the assumptions are violated.

Assumption #1: Normality

ANOVA assumes that each sample was drawn from a normally distributed population.

How to check this assumption in R:

To check this assumption, we can use two approaches:

- Check the assumption visually using histograms or Q-Q plots.

- Check the assumption using formal statistical tests like Shapiro-Wilk, Kolmogorov-Smirnov, Jarque-Bera, or D’Agostino-Pearson.

For example, suppose we recruit 90 people to participate in a weight-loss experiment in which we randomly assign 30 people to follow either program A, program B, or program C for one month. To see if the program has an impact on weight loss, we want to conduct a one-way ANOVA. The following code illustrates how to check the normality assumption using histograms, Q-Q plots, and a Shapiro-Wilk test.

1. Fit ANOVA Model.

#make this example reproducible
set.seed(0)

#create data frame
data <- data.frame(program = rep(c("A", "B", "C"), each = 30),
                   weight_loss = c(runif(30, 0, 3),
                                   runif(30, 0, 5),
                                   runif(30, 1, 7)))

#fit the one-way ANOVA model
model <- aov(weight_loss ~ program, data = data)

2. Create histogram of response values.

#create histogram

hist(data$weight_loss)

The distribution doesn’t look very normal (e.g., it doesn’t have a “bell” shape), but we can also create a Q-Q plot to get another look at the distribution.

3. Create Q-Q plot of residuals.

#create Q-Q plot to compare this dataset to a theoretical normal distribution
qqnorm(model$residuals)

#add straight diagonal line to plot
qqline(model$residuals)

In general, if the data points fall along a straight diagonal line in a Q-Q plot, then the dataset likely follows a normal distribution. In this case, we can see that there is some noticeable departure from the line along the tail ends which might indicate that the data is not normally distributed.

4. Conduct Shapiro-Wilk Test for Normality.

#Conduct Shapiro-Wilk Test for normality
shapiro.test(data$weight_loss)

#Shapiro-Wilk normality test
#
#data: data$weight_loss
#W = 0.9587, p-value = 0.005999

The Shapiro-Wilk Test tests the null hypothesis that the samples come from a normal distribution vs. the alternative hypothesis that the samples do not come from a normal distribution. In this case, the p-value of the test is 0.005999, which is less than the alpha level of 0.05. This suggests that the samples do not come from a normal distribution.
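Since the assumption concerns the population behind each group rather than the pooled response, it can also be useful to run the test separately for each program or on the model residuals. The following is a minimal sketch of one way to do that, assuming the same simulated data as above. The object names `p_by_group` and `resid_test` are illustrative only.

```r
#recreate the simulated data so this block runs on its own
set.seed(0)
data <- data.frame(program = rep(c("A", "B", "C"), each = 30),
                   weight_loss = c(runif(30, 0, 3),
                                   runif(30, 0, 5),
                                   runif(30, 1, 7)))
model <- aov(weight_loss ~ program, data = data)

#Shapiro-Wilk p-value for each program separately
p_by_group <- tapply(data$weight_loss, data$program,
                     function(x) shapiro.test(x)$p.value)
print(p_by_group)

#Shapiro-Wilk test on the residuals of the fitted model
resid_test <- shapiro.test(residuals(model))
print(resid_test)
```

A small p-value for any single group, or for the residuals, points to the same concern as the pooled test.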

What to do if this assumption is violated:

In general, a one-way ANOVA is considered to be fairly robust against violations of the normality assumption as long as the sample sizes are sufficiently large.

Also, if you have extremely large sample sizes then statistical tests like the Shapiro-Wilk test will almost always tell you that your data is non-normal. For this reason, it’s often best to inspect your data visually using graphs like histograms and Q-Q plots. By simply looking at the graphs, you can get a pretty good idea of whether or not the data is normally distributed.
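To illustrate the point about large samples, the simulation below draws a small and a large sample from the same mildly heavy-tailed distribution and compares the Shapiro-Wilk p-values. The t-distribution with 10 degrees of freedom and the sample sizes are assumptions chosen purely for illustration, and the exact p-values depend on the seed. Note that `shapiro.test()` accepts at most 5000 observations.

```r
#make this example reproducible
set.seed(1)

#two samples from the same slightly non-normal distribution
small_sample <- rt(50, df = 10)
large_sample <- rt(5000, df = 10)

#Shapiro-Wilk p-values for each sample size
p_small <- shapiro.test(small_sample)$p.value
p_large <- shapiro.test(large_sample)$p.value
print(c(n_50 = p_small, n_5000 = p_large))
```

With the larger sample, the test has far more power to flag the same small departure from normality, which is why a visual check is a useful complement.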

If the normality assumption is severely violated or if you just want to be extra conservative, you have two choices:

(1) Transform the response values of your data so that the distributions are more normally distributed.

(2) Perform an equivalent non-parametric test such as a Kruskal-Wallis Test that doesn’t require the assumption of normality.
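Both options can be sketched in a few lines, assuming the same simulated data as above. The square-root transformation is only one possible choice, picked here for illustration rather than prescribed by the method, and `kruskal.test()` is the base R function for the Kruskal-Wallis Test.

```r
#recreate the simulated data so this block runs on its own
set.seed(0)
data <- data.frame(program = rep(c("A", "B", "C"), each = 30),
                   weight_loss = c(runif(30, 0, 3),
                                   runif(30, 0, 5),
                                   runif(30, 1, 7)))

#option 1: transform the response, refit the model, and re-check the residuals
data$sqrt_weight_loss <- sqrt(data$weight_loss)
model_sqrt <- aov(sqrt_weight_loss ~ program, data = data)
shapiro_sqrt <- shapiro.test(residuals(model_sqrt))
print(shapiro_sqrt)

#option 2: run a Kruskal-Wallis Test on the original response
kw <- kruskal.test(weight_loss ~ program, data = data)
print(kw)
```

Whether a transformation helps depends on the shape of the data, so it is worth re-running the normality checks on the transformed values before relying on the refitted model.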

Assumption #2: Equal Variance

ANOVA assumes that the variances of the populations that the samples come from are equal.

How to check this assumption in R:

We can check this assumption in R using two approaches:

- Check the assumption visually using boxplots.

- Check the assumption using a formal statistical test like Bartlett’s Test.

The following code illustrates how to do so, using the same fake weight-loss dataset we created earlier.

1. Create boxplots.

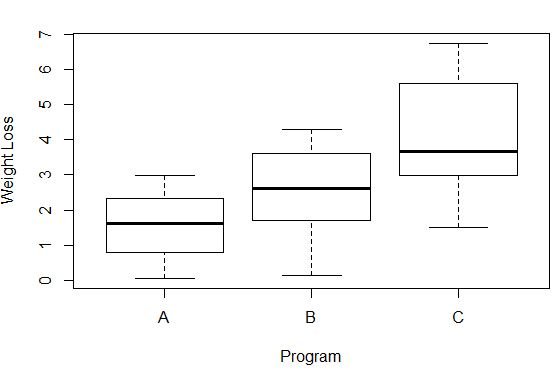

#Create box plots that show distribution of weight loss for each group
boxplot(weight_loss ~ program, xlab='Program', ylab='Weight Loss', data=data)

The spread of weight loss in each group is reflected in the length of each box, which spans the interquartile range. A longer box indicates more spread and, typically, a higher variance. For example, we can see that the variance is a bit higher for participants in program C compared to both program A and program B.
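To back up the visual impression with numbers, the sample variance of each group can be computed directly. This is a minimal sketch assuming the same simulated data as above. The ratio of the largest to the smallest variance is an informal summary added here for illustration, not a formal test.

```r
#recreate the simulated data so this block runs on its own
set.seed(0)
data <- data.frame(program = rep(c("A", "B", "C"), each = 30),
                   weight_loss = c(runif(30, 0, 3),
                                   runif(30, 0, 5),
                                   runif(30, 1, 7)))

#sample variance of weight loss within each program
group_var <- tapply(data$weight_loss, data$program, var)
print(group_var)

#ratio of the largest to the smallest group variance
var_ratio <- max(group_var) / min(group_var)
print(var_ratio)
```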

2. Conduct Bartlett’s Test.

#Conduct Bartlett's Test for equal variances
bartlett.test(weight_loss ~ program, data=data)

#Bartlett test of homogeneity of variances
#
#data: weight_loss by program
#Bartlett's K-squared = 8.2713, df = 2, p-value = 0.01599

Bartlett’s Test tests the null hypothesis that the samples have equal variances vs. the alternative hypothesis that the samples do not have equal variances. In this case, the p-value of the test is 0.01599, which is less than the alpha level of 0.05. This suggests that the samples do not all have equal variances.
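Bartlett's Test is known to be sensitive to departures from normality. As a cross-check, base R also provides `fligner.test()` (the Fligner-Killeen test), which is less sensitive to non-normal data. This test is not part of the original example and is shown only as a sketch on the same simulated data.

```r
#recreate the simulated data so this block runs on its own
set.seed(0)
data <- data.frame(program = rep(c("A", "B", "C"), each = 30),
                   weight_loss = c(runif(30, 0, 3),
                                   runif(30, 0, 5),
                                   runif(30, 1, 7)))

#Fligner-Killeen test of homogeneity of variances
fk <- fligner.test(weight_loss ~ program, data = data)
print(fk)
```

If both tests point the same way, the conclusion about the variances is less likely to be driven by non-normality alone.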

What to do if this assumption is violated:

In general, a one-way ANOVA is considered to be fairly robust against violations of the equal variances assumption as long as each group has the same sample size.

However, if the sample sizes are not the same and this assumption is severely violated, you could instead run a Kruskal-Wallis Test, which is the non-parametric version of the one-way ANOVA.

Assumption #3: Independence

ANOVA assumes:

- The observations in each group are independent of the observations in every other group.

- The observations within each group were obtained by a random sample.

How to check this assumption:

There is no formal test you can use to verify that the observations in each group are independent and that they were obtained by a random sample. The only way this assumption can be satisfied is if a randomized design was used.

What to do if this assumption is violated:

Unfortunately, there is very little you can do if this assumption is violated. Simply put, if the data was collected in a way where the observations in each group are not independent of observations in other groups, or if the observations within each group were not obtained through a randomized process, the results of the ANOVA will be unreliable.

If this assumption is violated, the best thing to do is to set up the experiment again in a way that uses a randomized design.
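As a sketch of what a randomized design can look like in practice, the code below randomly assigns 90 participants to three programs of 30 each by shuffling the group labels with `sample()`. The participant IDs and column names are hypothetical.

```r
#make this example reproducible
set.seed(0)

#hypothetical list of 90 participants
participants <- data.frame(id = 1:90)

#shuffle 30 copies of each label so each program gets exactly 30 participants
participants$program <- sample(rep(c("A", "B", "C"), each = 30))

#confirm the group sizes
group_sizes <- table(participants$program)
print(group_sizes)
```

Because the labels are shuffled rather than chosen by the researcher or the participants, group membership is unrelated to any characteristic of the participants.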
